In conjunction

with computers, the term conferencing

is often used in two different ways. One refers to bulletin boards and mail

list asynchronous exchanges of

messages between multiple users; secondly, one may refer to synchronous or so-called real-time conferencing, including

audio/video communication and shared tools such as whiteboards and other

applications. This document is about

the architecture for this latter application, multimedia conferencing in an Internet

environment. There are other

infrastructures for teleconferencing in the world: POTS (Plain Old Telephone

System) networks often provide voice conferencing and phone-bridges, while with

ISDN, H.320 [h320] can be used for small, strictly organised video-telephony

conferencing. This latter infrastructure will be called ITU Conferencing in this report.

The use of the

term architecture in the title of this document should be understood neither as

meaning plans for building specific software nor an inflexible environment in

which conferencing must operate; it is a description of the context in which

the project is working and the requirements for individual elements of that

conferencing environment. It also includes the type of components which the

MECCANO partners are committed to provide. Moreover, this document does not

intend to give a complete bibliography of multimedia conferencing, or even

Mbone multicast conferencing tools. It references mainly those used within the

MECCANO project. We use directly many tools deriving from the earlier

activities of the Lawrence Berkeley Laboratories (LBL) [lbl] and the European

projects MICE [mice] and MERCI [merci]. We are fully aware of the later work of

MASH [mash], MATES [mates] and MARRATECH [marra]. The first is insufficiently

modular for us to include parts; the commercial nature of the latter two has

made it difficult to integrate them with parts of our system, in order to

provide the extension we need for security, loss-resistance and multiple

platforms.

Most of this

Deliverable is about the architecture of multicast conferencing and data

delivery as being developed in the Internet Engineering Task Force [ietf]. The Mbone is used to describe that portion

of the Internet, which supports multicast data distribution; while this is currently

being used mainly for conferencing and the distribution of multimedia

broadcasting, there are many other uses of the technology. For the sake of

notation only, this form of conferencing will be called Mbone Conferencing. There used to be a clean divide between this

conferencing architecture and that being pursued in the ITU as exemplified by

H.320 for ISDN conferencing; the former used the Internet, the latter the ISDN

or POTS. Recently the distinction has become somewhat blurred, because at the

lower transport levels the ITU has adopted the same procedures as IETF [h323];

this means that ITU-style conferences can also use the Internet. There are

still some differences, but there is also clear overlap. The architectural

environment of the ITU mechanisms do not scale as well, but for small, tightly

controlled, conferences they are a quite viable alternative.

Since most of the

MECCANO project is about the use of Mbone conferencing, more detail will be

given of this technology. However, one part of the project is concerned with

the provision of facilities to allow ITU-conferencing workstations to join

Mbone conferences and to allow Mbone-conferencing workstations to join ITU

conferences. For this reason enough detail will be provided to define the

requirements for the gateways needed. In addition, we will attempt to indicate

over what range of parameters we consider it desirable to use the ITU

conferencing concepts - and even where some of those concepts could be added to

Mbone conferencing.

Sections 2, 3, 4

and 6 are based on “The Internet Multimedia Conferencing Architecture”, an

overview of Mbone conferencing being prepared both as a paper and a submission

to the MMUSIC group of the IETF [han99-3]. There are some differences between

the current draft of that paper and the relevant sections of this document.

These reflect that the purpose of this Deliverable is to define the MECCANO

architecture. This is closely aligned to the activity of the MMUSIC group, but

there are differences of emphasis. Moreover, we address issues that are not

addressed in that paper, and often are not even appropriate to the IETF. There

are two reasons for referring to that here. First we wish to acknowledge our

substantial debt to the authors of [han99-3]; secondly, we do not imply that

all the statements here have the approval of the authors of [han99-3].

The Mbone

architecture is only one of the architectures being considered in the MECCANO

project. Another is the ITU one, exemplified by H.323. This follows a much more

conventional ITU approach, which is sender driven. An earlier complete

independence of protocol structures in the H.320 family has become more similar

in the H.323 version because both use the same underlying transport protocols.

The methods of control and initiation are very different, but it is meaningful

to consider H.323 workstations joining Mbone conferences and vice-versa -

without undue processing on the media streams themselves during the running

conferences. Section 7 gives the salient properties of the ITU architecture.

There are many

other areas being explored in the project, most of which have architectural

considerations. In Section 8 we consider the various gateways being considered;

some of these are between the Mbone and the ITU worlds; others are between

different types of technology. Others are concerned with overcoming the

justified fears that many organisations have to allowing multicast into their

organisations. In Section 9 we consider the mechanisms which are required to

provide privacy in conferences, and to allow authentication both of

participants and the activities of conference organisers.

Other sections

deal with the architectural implications of various tools and components. Thus

in Section 3 we consider aspects of supporting various network types, in

Sections 4 and 5 we consider the architectures of the Mbone tools and in

Section 10 those of the recording and replay media servers. Finally, some

conclusions are drawn in Section 11.

The architecture

that has evolved in the Internet is general as well as being scalable to very

large groups; it permits the open introduction of new media and new

applications as they are devised. As the simplest case, it also allows two

persons to communicate via audio only; i.e. it encompasses IP telephony.

The determining

factors of conferencing architecture are communication between (possibly large)

groups of humans and real-time delivery of information. In the Internet, this is supported at a

number of levels. The remainder of this

section provides an overview of this support, and the rest of the document

describes each aspect in more detail.

In a conference,

information must be distributed to all the conference participants. Early conferencing systems used a fan-out of

data streams, e.g., one connection between each pair of participants, which

means that the same information must cross some networks more than once. The

Internet architecture uses the more efficient approach of multicasting the

information to all participants (cf. Section 3.1).

Multimedia

conferences require real-time delivery of at least the audio and video

information streams used in the conference.

In an ISDN context, fixed rate circuits are allocated for this purpose -

whether their bandwidth is required at any particular instance or not. On the

other hand, the traditional Internet service model (best effort) cannot make the necessary Quality of Service (QoS)

available in congested networks. New

service models are being defined in the Internet together with protocols to

reserve capacity or prioritise traffic in a more flexible way than that

available with circuit switching (cf. Section 3.2).

In a datagram

network, multimedia information must be transmitted in packets, some of which

may be delayed more than others. In order that audio and video streams be

played out at the recipient in the correct timing, information must be

transmitted that allows the recipient to reconstitute the timing. A transport

protocol with the specific functions needed for this has been defined (cf.

Section 4.1). The Internet is a very

heterogeneous world. Techniques exist to exploit this, and to deliver

appropriate quality to different participants in the same conference according to

their capabilities.

The humans

participating in a conference generally need to have a specific idea of the

context in which the conference is happening, which can be formalised as a

conference policy. Some conferences are essentially crowds gathered around an

attraction, while others have very formal guidelines on who may take part

(listen in) and who may speak at which point.

In any case, initially the participants must find each other, i.e.

establish communication relationships (conference set-up, Section 6.2).

During the conference, some conference control information is exchanged

to implement a conference policy or at least to inform the participants of who

is present.

In addition,

security measures may be required to actually enforce the conference policy,

e.g. to control who is listening and to authenticate contributions as

purporting to originate from a specific person. In the Internet, there is little tendency to rely on the

traditional security of distribution

offered e.g. by the phone system.

Instead, cryptographic methods are used for encryption and

authentication, which need to be supported by additional conference set-up and

control mechanisms (See Section 9).

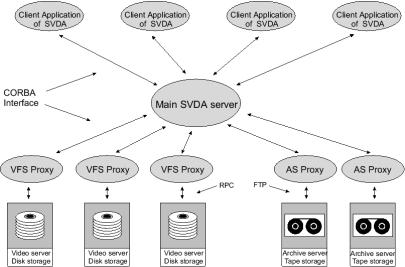

Figure 1 Internet

multimedia conferencing protocol stacks

Most of the

protocol stacks for Internet multimedia conferencing are shown in Fig. 1. Most of the protocols are not deeply

layered, unlike many protocol stacks, but rather are used alongside each other

to produce a complete conference. For secure conferencing, there may be

additional protocols for group management. This question is addressed in

Section 9.

IP multicast

provides efficient many-to-many data distribution in an Internet

environment. It is easy to view IP

multicast as simply an optimisation for data distribution; indeed this is the

case, but IP multicast can also result in a different way of thinking about

application design. To see why this

might be the case, examine the IP multicast service model, as described by Jacobson

[jacobson95]:

·

Senders just send to the group

·

Receivers express an interest in receiving data sent to the group

·

Routers conspire to deliver data from senders to receivers

With IP

multicast, the group is indirectly identified by a single IP class-D multicast

address.

Several things

are important about this service model from an architectural point of

view. Receivers do not need to know who

or where the senders are to receive traffic from them. Senders never need to know who the receivers

are. Neither senders nor receivers need

care about the network topology as the network optimises delivery.

The level of

indirection introduced by the IP class D address denominating the group solves

the distributed systems binding problem, by pushing this task down into

routing. Given a multicast address (and UDP port), a host can send a message to

the members of a group without needing to discover who they are. Similarly receivers can tune in to multicast data sources without needing to bother the

data source itself with any form of request.

IP multicast is a

natural solution for multi-party conferencing because of the efficiency of the

data distribution trees, with data being replicated in the network at

appropriate points rather than in end-systems.

It also avoids the need to configure special-purpose servers to support

the session; such servers require support, cause traffic concentration and can

be a bottleneck. For larger

broadcast-style sessions, it is essential that data-replication is carried out

in a way that requires only that per-receiver network-state is local to each

receiver, and that data-replication occurs within the network. Attempting to configure a tree of

application-specific replication servers for such broadcasts rapidly becomes a multicast routing problem; thus native

multicast support is a more appropriate solution.

There are a

number of IETF documents outlining the requirements of Hosts and multicast

routing. The most important, defining the Host extensions for IP multicast, are

[ipm]. There are many mechanisms, which have been proposed for multicast

routing; some of these are described in [dvmrp], [pimsm], [pimdm] and [bal98].

It is beyond the scope of this Deliverable to discuss the differences and

advantages of the different proposals.

There is an

important question on how an application chooses which multicast address to

use. In the absence of any other information, we can bootstrap a multicast

application by using well-known multicast addresses. Routing (unicast and multicast) and group membership protocols

[deer88-1] can do just that. However,

this is not the best way of managing applications of which there is more than

one instance at any one time.

For these, we

need a mechanism for allocating group addresses dynamically, and a directory

service which can hold these allocations together with some key (session

information for example - see later), so that users can look up the address

associated with the application. The

address allocation and directory functions should be distributed to scale well.

Multicast address

allocation is currently an active area of research. For many years multicast

address allocation has been performed using multicast session directories (cf.

Section 6.2), but as the users and uses of IP multicast increase, it is

becoming clear that a more hierarchical approach is required.

An architecture

[han99-2] is currently being developed based around a well-defined API that an

application can use to request an address.

The host then requests an address from a local address allocation

server, which in turn chooses and reserves an unallocated address from a range

dynamically allocated to the domain. By

allocating addresses in a hierarchical and topologically sensitive fashion, the

address itself can be used in a hierarchical multicast routing protocol

currently being developed (BGMP, [thal98]) that will help multicast routing

scale more gracefully that current schemes.

A number of

specific documents giving methods for address allocation are given in [estr99]

and [phil98]. It is relevant also to consider the extensions required to

resource discovery protocols using multicast [patel99].

Traditionally the

Internet has provided so-called best-effort

delivery of datagram traffic from senders to receivers. No guarantees are made regarding when or if

a datagram will be delivered to a receiver, however, datagrams are normally

only dropped when a router exceeds a queue size limit due to congestion. The best-effort Internet service model does

not assume FIFO queuing, although many routers have implemented this.

With best-effort

service, if a link is not congested, queues will not build at routers,

datagrams will not be discarded in routers, and delays will consist of

serialisation delays at each hop plus propagation delays. With sufficiently fast link speeds,

serialisation delays are insignificant compared to propagation delays. For slow

links, a set of mechanisms has been defined that helps minimise serialisation

and link access delay is low.

If a link is

congested, with best-effort service, queuing delays will start to influence

end-to-end delays, and packets will start to be lost as queue size limits are

exceeded. High quality real-time

multimedia traffic does not cope well with packet loss levels of more than a

few percent unless steps are taken to mitigate its effects. One such step is

the use of redundant encoding [redenc] to raise the level at which loss becomes

a problem. In the last few years a

significant amount of work has also gone into providing non-best-effort

services that would provide a better assurance that an acceptable quality

conference will be possible.

Real-time

Internet traffic is defined as that carried by datagrams that are delay

sensitive. It could be argued that all

datagrams are delay sensitive to some extent, but for these purposes we refer

only to datagrams where exceeding an end-to-end delay bound of a few hundred

milliseconds renders the datagrams useless for the purpose they were intended. For the purposes of this definition, TCP

traffic is normally not considered to be real-time traffic, although there may

be exceptions to this rule.

On congested

links, best-effort service queuing delays will adversely affect real-time

traffic. This does not mean that

best-effort service cannot support real-time traffic - merely that congested

best-effort links seriously degrade the service provided. For such congested links, a

better-than-best-effort service is desirable. To achieve this, the service

model of the routers can be modified. FIFO queuing can be replaced by packet

forwarding strategies that discriminate different flows of traffic. The idea

of a flow is very general. A flow might

consist of all marketing site web traffic,

or all fileserver traffic to and from

teller machines. On the other hand,

a flow might consist of a particular sequence of packets from an application in

a particular machine to a peer application in another particular machine set up

on request, or it might consist of all packets marked with a particular

Type-of-Service bit.

There is really

a spectrum of possibilities for non-best-effort service something like that

shown in Fig. 2.

Figure 2

Spectrum of Internet service types

This spectrum is

intended to illustrate that between best-effort and hard per-flow guarantees

lie many possibilities for non-best-effort service. These include having hard

guarantees based on an aggregate reservation, assurances that traffic marked

with a particular type-of-service bit will not be dropped so long as it remains

in profile, and simpler prioritisation-based services.

Towards the right

hand side of the spectrum, flows are typically identifiable in the Internet by

the tuple: source machine, destination

machine, source port, destination port, protocol, any of which could be

“ANY” (wildcarded).

In the multicast

case, the destination is the group, and can be used to provide efficient

aggregation.

Flow

identification is called classification; a class (which can contain one or more

flows) has an associated service model applied. This can default to best effort.

Through network

management, we can imagine establishing classes of long-lived flows. For

example, Enterprise networks (Intranets)

often enforce traffic policies that distinguish priorities which can be used to

discriminate in favour of more important traffic in the event of overload

(though in an underloaded network, the effect of such policies will be

invisible, and may incur no load/work in routers).

The router

service model to provide such classes with different treatment can be as simple

as a priority queuing system, or it can be more elaborate.

Although

best-effort services can support real-time traffic, classifying real-time

traffic separately from non-real-time traffic, and giving real-time traffic

priority treatment, ensures that real-time traffic sees minimum delays. Non-real-time TCP traffic tends to be

elastic in its bandwidth requirements, and will then tend to fill any remaining

bandwidth.

We could imagine

a future Internet with sufficient capacity to carry all of the world's

telephony traffic (POTS). Since this is

a relatively modest capacity requirement, it might be simpler to establish POTS as a static class, which is given

some fraction of the capacity overall. In that case, within the backbone of the

network no individual call need be given an allocation. We would no longer need

the call set-up/tear down that was needed in the legacy POTS; this was only

present due to under-provisioning of trunks, and to allow the trunk exchanges

the option of call blocking. The vision

is of a network that is engineered with capacity for all of the non-best-effort

average load sources to send without needing individual reservations.

3.2.2.1

RSVP

For flows that

may take a significant fraction of the network (i.e. are special and cannot just be lumped under a static class), we need a

more dynamic way of establishing these classifications. In the short term, this

applies to many multimedia calls since the Internet is largely under-provisioned

at the time of writing. RSVP has been standardised for just this purpose. It

provides flow identification and classification. Hosts and applications are

modified to speak RSVP client language, and routers speak RSVP.

Since most

traffic requiring reservations is delivered to groups (e.g. TV), it is natural

for the receiver to make the request for a reservation for a flow. This has the

added advantage that different receivers can make heterogeneous requests for

capacity from the same source. Thus

RSVP can accommodate monochrome, colour and HDTV receivers from a single source

(also see Section 4.2). Again the routers conspire to deliver the right flows

to the right locations. RSVP accommodates the wildcarding noted above.

If a network is

provisioned such that it has excess capacity for all the real-time flows using

it, a simple priority classification ensures that real-time traffic is

minimally delayed. However, if a

network is insufficiently provisioned for the traffic in a real-time traffic class,

then real-time traffic will be queued, and delays and packet loss will

result. Thus in an under-provisioned

network, either all real-time flows will suffer, or some of them must be given

priority.

RSVP provides a

mechanism by which an admission control request can be made, and if sufficient

capacity remains in the requested traffic class, then a reservation for that

capacity can be put in place. If insufficient capacity remains, the admission

request will be refused, but the traffic will still be forwarded with the

default service for that traffic's traffic class. In many cases even an admission request that failed at one or

more routers can still supply acceptable quality as it may have succeeded in

installing a reservation in all the routers that were suffering

congestion. This is because other

reservations may not be fully utilising their reserved capacity in those

routers where the reservation failed.

A number of

specific documents describing the RSVP protocols are: [rsvp], [rsvp-cls] and

[rsvp-gs]

3.2.2.2

Billing

If a reservation

involves setting aside resources for a flow, this will tie up resources so that

other reservations may not succeed; then, depending on whether the flow fills

the reservation, other traffic may be prevented from using the network. Clearly some negative feedback is required

in order to prevent pointless reservations from denying service to other

users. This feedback is typically in

the form of billing.

Billing requires

that the user making the reservation be properly authenticated so that the

correct user can be charged. Billing

for reservations introduces a level of complexity to the internet that has not

typically been experienced with non-reserved traffic, and requires network

providers to have reciprocal usage-based billing arrangements for traffic

carried between them. It also suggests

the use of mechanisms whereby some fraction of the bill for a link reservation

can be charged to each of the downstream multicast receivers.

Whereas RSVP asks

routers to classify packets into classes to achieve a requested quality of

services, it is also possible to explicitly mark packets to indicate the type

of service required. Of course, there

has to be an incentive and mechanisms to ensure that high-priority is not set by everyone in all packets; this incentive

is provided by edge-based policing and by buying profiles of higher priority

service. In this context, a profile

could have many forms, but a typical profile might be a token-bucket filter

specifying a mean rate and a bucket size with certain time-of-day restrictions.

This is still an

active research area, but the general idea is for a customer to buy from their

provider a profile for higher quality service, and the provider polices marked

traffic from the site to ensure that the profile is not exceeded. Within a provider's network, routers give

preferential services to packets marked with the relevant type-of-service

bit. Where providers peer, they arrange

for an aggregate higher-quality profile to be provided, and police each other's

aggregate if it exceeds the profile. In

this way, policing only needs to be performed at the edges to a provider's

network on the assumption that within the network there is sufficient capacity

to cope with the amount of higher-quality traffic that has been sold. The

remainder of the capacity can be filled with regular best-effort traffic.

One big advantage

of differentiated services over reservations is that routers do not need to

keep per-flow state, or look at source and destination addresses to classify

the traffic; this means that routers can be considerably simpler. Another big advantage is that the billing

arrangements for differentiated services are pairwise between providers at

boundaries - at no time does a customer need to negotiate a billing arrangement

with each provider in the path. With reservations there may be ways to avoid

this too, but they are somewhat more difficult given the more specific nature

of a reservation. A good overview of the network service model for

Differentiated Services (DiffServe) is given in [difserv].

Network support

is not supposed to be a major part of the MECCANO project, but it is an

essential ingredient to provide adequate service. We describe here some of the

requirements and components we consider indispensable for MECCANO services. It

is essential that all the main nodes participating in MECCANO conferences have

reasonable connection to the Internet, and that the Internet has reasonable

performance.

The meaning of reasonable, is difficult to determine;

it depends on the performance desired. The key parameters are packets/sec,

variability in packet arrival (jitter in ms), mean transit time of packets,

stability of the Internet Connectivity of certain routes, availability of

multicast in the Internet Provider. These parameters have different impact on

the different services.

In international

services, the different paths often have very different performance

characteristics, moreover, alternate routing may be difficult to arrange

automatically - particularly with multicast. Hence it is essential that the

links are stable enough to have few outages of many seconds during a typical

session. If longer and more frequent interruptions of service take place, it is

very difficult to run a conference.

The normal

Internet topology with multicast provision is called the Mbone. This is a

single topology and has no differentiation between different users. Even if all

conferees have access to the Mbone, there remains the question of whether the

performance is adequate, and whether the demands on the bandwidth are too

great. If all the above are in order, nothing needs to be done to compensate.

Unfortunately, particularly the international Mbone is very variable between

countries, and they have different policies on how much bandwidth they provide

- both nationally and internationally. Thus we often need to supplement normal

Mbone by unicast tunnels. If this is done, we have to be very careful not to

upset the total Mbone topology.

With the present

European networks, the Mbone is often inadequate for reasonable conferencing.

Hence we often construct a significant part of the topology from unicast

routes. Both in MERCI and in MECCANO, we have used the experimental high-speed

networks originally JAMES, and more recently the VPN capability of TEN-155 to

construct a high-quality backbone. We must then construct reflectors at

strategic points to ensure full multicast facilities. If we still have access

to the Mbone at any site, we must ensure, by route filtering and scoping, that

the appropriate Mbone topology is not disturbed.

The next

parameter is throughput - in Kbps or packets/sec per audio stream; here the

requirements depend on the media and quality desired. For audio tools like RAT,

typical bandwidths needed are 8 - 32 Kbps; on good channels, with modern

codecs, the higher of these give good quality audio. For video using tools like

VIC, the corresponding rates are 50 Kbps - 3 Mbps. At the lower bandwidths,

with slowly varying scenes, a few frames/sec can be achieved with QCIF, at the

higher rates, full motion can be achieved at reasonable definition. The optimal

media data rates for specific bandwidth depend also on the level and nature of

channel errors. With audio, data arriving delayed or not at all is dropped.

However, it is possible to provide deliberate redundancy in the talk-spurts to

compensate for the loss. With video, similar errors will impact a whole frame.

For this reason, while inter-frame coding will give much better performance at

low error rates, it can be very inefficient at high ones. For this reason, most

current implementations of tools like VIC do not currently use inter-frame

coding; therefore higher data rates are needed for a given quality. We have

done many measurements on data quality as a function of packet loss. We find

loss rates of 15% still give reasonable quality of audio, with losses up to 40%

tolerable with good use of redundancy. With no inter-frame coding, loss rates

of up to 20% give quite tolerable video.

The next

parameter is variability of delay, called jitter. In most of the audio tools,

one can trade tolerance to jitter against delay. A jitter above a hundred ms

in a replayed packet is so annoying, that this may be taken as an approximate

cut-off point. If most packets have a variability of arrival between 50 and 150

ms, for example, then one can deliberately put in a delay of 150 ms in the

replay buffer. Any packets arriving more than 150 ms late will be dropped.

Similar considerations apply to video. If the delay is made too long, it is

very annoying for fully interactive sessions; it is much less serious for

one-way activities like lectures. A jitter above the cut-off point is exactly

equivalent to packet loss.

The

considerations for shared work-space tools are different. Here because the

individual operations must be kept consistent, some form of Reliable multicast

is normally used. Now it is the total rate of updates, packet loss, and

connectivity that are the important factors. Overmuch packet loss will increase

the traffic level on the network; the load is normally much less than that due

to the audio and video.

So far we have

considered standard use of the

Internet. In fact there are many activities to introduce mechanisms for

providing “Quality of Service” (QoS), as mentioned in Section 3.2. Some of

these rely on mechanisms introduced at the access point of the networks; most

DiffServe algorithms come into this category. Others rely on setting up

reservations throughout the networks; most IntServ algorithms come into this

category. So far none of the regular National or International IPv4 networks

used in MECCANO support such mechanisms. With the next generation of Internet

Protocols, those based on IPv6 [ipv6], there is support for this facility at

the IP level. For this reason, there is starting to be support on experimental

networks running IPv6 for QoS. There is no reason why the same support cannot

be provided on IPv4 networks; it is just not happening.

We would like to

explore in MECCANO the scope for improving the quality of conferencing by

providing QoS support. In view of the above, there will be a deliberate

activity in the project to support IPv6 and its QoS enhancements. This work

will have many aspects. Clearly it will be necessary to provide IPv6-enabled

routers; at the very least they can apply DiffServ algorithms at the borders to

the WANs. It will be necessary to tunnel through any intermediate networks that

support only IPv4. When VPNs are used, as with some of the TEN-155 activity, it

may be possible to use IPv6 throughout.

It is possible to

provide IPv4 enabled applications inside the local areas of conferees, and then

to change to IPv6 at the edges to the wide-area. This approach will be followed

initially, providing only very crude clues to where QoS is to be used - like

favouring audio over video. This can be achieved by prioritising streams in the

router based on the multicast group used with techniques such as Class-Based

Queueing (CBQ) [cbq]. Another aspect will be to make the applications able to

signal their own needs for QoS; thus audio codecs may decide which of their

streams require the better service, and to mark them accordingly. The lower level

software may then assign the different streams to different multicast groups;

the routers may then apply the relevant QoS policies. Because IPv6 provides

support for QoS at the IP level, many operators will not support QoS except on

IPv6-enabled parts of their networks. For this reason, another part of the

MECCANO project will be to ensure that the applications themselves can support

IPv6 directly. In many cases the implementation work required will be carried

out in other projects; the results will be used experimentally also in MECCANO.

Of course we

support the usual aggregation of LAN and WAN technologies which support

multicast, and are normally encountered in organisations. In addition, we will

support two others: unicast ISDN and Direct Broadcast Satellite access. The

salient point of each is discussed below.

Many

organisations, particularly industrial ones, do not support multicast - or will

not let it get through their firewalls. For this reason one useful device that

will be provided is one that does unicast<->multicast conversion. Further

details are given in Section 8. Others will want to participate in MECCANO via

unicast ISDN; this may be because their organisation will not allow direct

Internet access from inside their organisations, or may want to participate

from home. For this reason, we will provide such access facilities; the

gateways needs to provide them are discussed in Section 8.5.

The nature of

participation in multimedia conferences is that it is possible to participate

at different levels of service - and therefore of bandwidth need. It is

possible to participate with only audio - but get much more out of it if one

can also receive video or high quality presentation material. Moreover, we have

already said that the availability of network services is very variable; in

many areas of Western European countries, and in even larger areas when one

goes further East, the bandwidth available to individuals through the Internet

will not support even medium quality multimedia. For this reason, there is

considerable scope for supporting access mechanisms which are not symmetric. In

the future, we expect that mobile radio, xDSL and Cable TV access will become

very useful in this context; during the MECCANO project, we will not use such

mechanisms since we do not have access to them. We will, however, make use of

DBS services.

In a DBS,

equipment at the up-link site is a normal participant of the conference, and

receives all the multicast data streams; the up-link then retransmits all the

normal multicast digital stream via the satellite channel. Any participants via

the DBS system have a DBS receiving terminal which has a built-in multicast

router, and a separate Internet link. If there is no nearby multicast facility

on the Mbone, a unicast tunnel may need to be set up. Normally, as discussed in

Section 3.1, routers must prune any unicast traffic down-stream from them

towards leaf nodes; this uses the symmetric nature of most Internet

connections. In the DBS case, alternative mechanisms must be used. The full

details of the technology will be reported in the Deliverables of WP6, but an

overview is given below. Two approaches to solve the problem were

considered. The first one is based on routing protocol modification and the

other on tunneling.

In the first approach each

routing protocol is modified in order to take into account the unidirectional

aspect of the underlying network. Modifications to protocols such as RIP, OSPF

and DVMRP were proposed and implemented before the start of the MECCANO

project. The modified routing protocols are operational. The experimentation is

described in [udlr]www.inria.fr/rodeo/udlr. However, The second

approach was more attractive: a link layer tunneling mechanism that hides the

link uni-directionality and allows transparent functioning of all upper layer

protocols (routing and above).

Tunneling is a means to

construct virtual networks by encapsulating the data. Broadly speaking, data is sent by the network

layer instead of the data-link layer.

This generally allows new protocols experiments and provides a quick

simple solution to various routing problems by building a kind of virtual

network on top of the actual one. The aim of using tunneling is to make routing

protocols work over unidirectional links without having to provide any

modification to them.

The tunneling approach adds

a layer between the network interface and the routing software on both feed and

receiver sides (or between some intermediate gateways), resulting in the

emulation of a bi-directional satellite link where only an unidirectional link

is available. Packet encapsulation is

hiding the actual topology of the network in order to elude the routing

protocols and make them behave as if there exists a bi-directional satellite

link. The

tunneling mechanism we proposed is described in detail in an Internet draft of

the udlr working group [duros99]. Here follows a short description of the

solution.

Basically, routing traffic

is sent from the receivers to the feed on the virtual link and is later

captured by the added layer interface, which encapsulates it and sends it on

the actual reverse link. The feed station

then decapsulates it and transmits it to the routing protocols as if this was

coming from the virtual satellite link. As a receiver needs to sends a routing

message to a feed, the packet is encapsulated in an IP packet whose IP source

address is the receiver bi-directional address and IP destination address is

the feed bi-directional address. The datagram is then sent to the end point of

the tunnel via the terrestrial network. As it is received by the feed, the

payload of the datagram is decapsulated. The new IP packet that is obtained is

routed according it its destination address. The packet is then routed locally and not forwarded if the

destination address is the feed itself. The IP stack passes the packet to

higher level, in our case the routing protocol.

Similarly to routing

protocols modifications, as they discover feeds dynamically, receivers should

be capable of setting up tunnels dynamically as they boot up. The only way a

receiver can learn the tunnel end point is to define a new simple protocol.

Feeds periodically advertise their tunnel end point (IP address) over the

satellite network. As a receiver gets this message, it checks if the tunnel

exists, if not it creates a tunnel and uses it.

Routing protocols usually

have mechanisms based on timeouts to detect if a directly connected network is

down. If the satellite network is not operational and receivers keep on sending

their routings messages via regular connections, feeds will continue to send

packets on its unidirectional interface. This is undesirable behaviour because

it will create a black hole. In order to prevent this, when receivers have not

received routing messages from the satellite network for a defined time, they

turn their tunnel interface off. As a result, feeds receiving no routing

messages from receivers delete in their routing tables all destinations

reachable via the satellite network.

Having a tunnel between a

receiver and a feed is very attractive because the unidirectional link is

totally hidden to applications. As far as routing protocols are concerned they

have to be correctly configured. For instance, for RIP, a feed must announce

infinite distance vectors to receivers, this way receivers do not take into

account destinations advertised by feeds.

In the MECCANO

project, we will provide as good quality links as we can to any available DBS

up-links. There will certainly be one at INRIA, but there may be ones also at

other sites. We will also equip a number of sites with DBS receivers; this will

certainly include most of the MECCANO partners, but may include other sites

too. There will therefore be a DBS overlay in addition to any other network

facilities provided.

So-called

real-time delivery of traffic requires little in the way of transport protocol.

In particular, real-time traffic that is sent over more than trivial distances

is not retransmittable.

With packet

multimedia data there is no need for the different media comprising a

conference to be carried in the same packets.

In fact it simplifies receivers if different media streams are carried

in separate flows (i.e., separate transport ports and/or separate multicast

groups). This also allows the different

media to be given different quality of service. For example, under congestion, a router might preferentially drop

video packets over audio packets. In

addition, some sites may not wish to receive all the media flows. For example, a site with a slow access link

may be able to participate in a conference using only audio and a whiteboard

whereas other sites in the same conference with more capacity may also send and

receive video. This can be done because the video can be sent to a different

multicast group than the audio and whiteboard.

This is the first step towards coping with heterogeneity by allowing the

receivers to decide how much traffic to receive, and hence allowing a

conference to scale more gracefully.

Best-effort

traffic is delayed by queues in routers between the sender and the

receivers. Even reserved priority traffic

may see small transient queues in routers, and so packets comprising a flow

will be delayed for different times.

Such delay variance is known as jitter and is illustrated in Fig. 3

Figure 3 Network Jitter and Packet Audio

Real-time

applications such as audio and video need to be able to buffer real-time data

at the receiver for sufficient time to remove the jitter added by the network

and recover the original timing relationships between the media data. In order to know how long to buffer, each

packet must carry a timestamp, which gives the time at the sender when the data

was captured. Note that for audio and

video data timing recovery, it is not necessary to know the absolute time that

the data was captured at the sender, only the time relative to the other data

packets.

Figure 4

Inter-media synchronisation

As audio and

video flows will receive differing jitter and possibly differing quality of

service, audio and video that were grabbed at the same time at the sender may

not arrive at the receiver at the same time.

At the receiver, each flow will need a playout buffer to remove network

jitter. Adapting these playout buffers

so that samples/frames that originated at the same time are played out at the

same time (see Fig. 4) can perform inter-flow synchronisation.

This requires

that the time base of different flows from the same sender can be related at

the receivers, e.g. by making available the absolute times at which each of

them was captured.

The transport

protocol for real-time flows is RTP

[schu96-1]. This provides a standard format packet header which gives

media specific timestamp data, as well as payload format information and

sequence numbering amongst other things.

RTP is normally carried using UDP. It does not provide or require any

connection set-up, nor does it provide any enhanced reliability over UDP. For RTP to provide a useful media flow,

there must be sufficient capacity in the relevant traffic class to accommodate

the traffic. How this capacity is

ensured is independent of RTP.

Every original

RTP source is identified by a source identifier, and this source id is carried

in every packet. RTP allows flows from

several sources to be mixed in gateways to provide a single resulting

flow. When this happens, each mixed

packet contains the source IDs of all the contributing sources.

RTP media

timestamp units are flow specific - they are in units that are appropriate to

the media flow. For example, 8kHz

sampled PCM-encoded audio has a timestamp clock rate of 8kHz. This means that inter-flow synchronisation

is not possible from the RTP timestamps alone.

Each RTP flow is

supplemented by Real-Time Control Protocol (RTCP) packets. There are a number of different RTCP packet

types. RTCP packets provide the

relationship between the real-time clock at a sender and the RTP media

timestamps so that inter-flow synchronisation can be performed, and they

provide textual information to identify a sender in a conference from the

source id.

There are a

number of detailed documents on the RTP protocol, which has been adopted both

for ITU and Mbone conferencing. Some of these are:

·

[schu96-1], which gives the packet format for real-time traffic used in

RTP and RTCP.

·

[schu96-2], which specifies the RTP profile for AV traffic

·

[rtpf] There are a whole series of reports, which specify the payload

formats for specific codecs.

IP multicast

allows sources to send to a multicast group without being a receiver of that

group. However, for many conferencing

purposes it is useful to know who are listening to the conference, and whether

the media flows are reaching receivers properly. Accurately performing both

these tasks restricts the scaling of the conference. IP multicast means that no-one knows the precise membership of a

multicast group at a specific time, and this information cannot be discovered;

to try to do so would cause an implosion of messages, many of which would be

lost. A conference policy that restricts conference membership can be

implemented using encryption and restricted distribution of encryption keys;

this is discussed further in Section 9. However, RTCP provides approximate

membership information through periodic multicast of session messages; in

addition to information about the recipient, these also give information about

the reception quality at that receiver.

RTCP session messages are restricted in rate, so that as a conference

grows, the rate of session messages remains constant, and each receiver reports

less often. A member of the conference

can never know exactly who is present at a particular time from RTCP reports,

but does have a good approximation to the conference membership. The is analogous to what happens in a

real-world meeting hall; the meeting organisers may have an attendance list,

but if people are coming and going all the time, they probably do not know

exactly who is in the room at any one moment.

Reception quality

information is primarily intended for debugging purposes, as debugging of IP

multicast problems is a difficult task. However, it is possible to use

reception quality information for rate adaptive senders, although it is not

clear whether this information is sufficiently timely to be able to adapt fast

enough to transient congestion.

The Internet is

very heterogeneous, with link speeds ranging from 14.4 Kbps up to 1.2 Gbps, and

very varied levels of congestion. How

then can a single multicast source satisfy a large and heterogeneous set of

receivers?

In addition to

each receiver performing its own adaptation to jitter, if the sender layers

[mcca95] its video (or audio) stream, different receivers can choose to receive

different amounts of traffic and hence different qualities. To do this, the sender must code they video

as a base layer (the lowest quality that might be acceptable) and a number of

enhancement layers, each of which adds more quality at the expense of more

bandwidth. With video, these additional

layers might increase the frame rate or increase the spatial resolution of the

images or both. Each layer is sent to a different multicast group, and

receivers can decide individually how many layers to subscribe to. This is illustrated in Fig. 5. Of course, if they are going to respond to

congestion in this way, then we also need to arrange that the receivers in a

conference behind a common bottleneck tend to respond together. This will

prevent de- synchronised experiments by different receivers from having the net

effect that too many layers are always being drawn through a common

bottleneck. Receiver-driven Layered

Multicast (RLM) [mcca96] is one way that this might be achieved, although there

is continuing research in this area.

`

`

Figure 5 Receiver

adaptation using multiple layers and multiple multicast groups

Fundamental to

the transmission of audio and video streams over digital networks is the use of

coders and decoders; their combination is called a codec. These are devices that sample the analogue signals, and

process the resulting digital streams. This processing, which is done in the

codec, will require variable amounts of processing power, and produce output

with different properties. The algorithms used in different codecs are beyond

the scope of this Deliverable. There is a detailed discussion of different

audio and video codecs in [acoder] and [vcoder]. Because it is inevitable that

there are some losses in data transmission, various techniques are invoked to

overcome the impact of errors. Many of these need not be discussed here; they

impact strongly the interaction between the coder and the decoder, but have

little impact on the conferencing architecture. There are some techniques,

however, which do have such impact - particularly if the network

characteristics are variable.

One aspect is

that different codec algorithms have different compression factors; i.e. for a

given picture, different amounts of data are generated. If one part of the

network is able to transmit at one speed without undue network error, and

another has a lower capacity, it may be necessary to use different coding

algorithms in the two regions. To mediate between the two may require decoding

and re-coding (though this may be possible completely in the digital domain).

Devices that carry out this process are called transcoders [amir98].

Another property

of codecs is that they may be scaleable, producing different layers of coding.

A receiver may process one, some or all of these layers. With well-structured

layered coding, processing one layer will provide a minimal quality of media;

processing more layers will provide progressively better quality. If all the

layers are sent over one multicast group, then a layered codec may not be

architecturally different from other codecs for the purpose of this paper.

However, if it is easy for an intermediate node to recognise the different

layers, then it may be easy to provide digitally the equivalent of transcoding.

It is also possible to send different layers to different multicast groups. By

subscribing only to some groups, a receiver may avoid overloading the network,

or its own processor. Alternately, by passing only certain multicast groups, an

active element in the network may ensure the protection of a lower capacity

region. Both these mechanisms do have impact on the architecture of multicast

conferencing. In addition, if the network does not support hierarchical

protection, unequal error protection

has to be added at the transmitter such that the more important layers are

protected by a stronger channel code [scalvico, rs].

Since digital

video has by far the largest data rate of all media, it is essential to use

scalable video coding. This is not only true for video transmission but also

for the processing at encoder and decoder. Especially at the encoder side,

which is usually much more complex than the decoder, scalable software is

essential, if it should be used on various platforms. A scalable coder should

choose its coding algorithms dynamically according to the available processing

power and produce hierarchical bit streams for different transmission rates

[scalvico].

Codec algorithms

are deeply concerned with providing the optimal digital rendering of the media

streams in terms of certain criteria. These criteria certainly include faithful

reproduction, minimising bandwidth, reducing computation, and robustness to

errors. The robustness can be provided by providing various forms of redundancy

[rosen98]. The redundancy may be independent of the contents; there are various

codes which can recover from successive bit errors, for example. Alternately

one can use knowledge of the characteristics of the media to limit the amount

of redundancy transmitted. For example, with speech, if the previous audio

frame is similar in characteristics to the current frame we do not bother to

send a redundant copy; receiver-driven error concealment will provide an

acceptable alternative in case of loss. In a similar manner, we could use

knowledge of the characteristics of a speech signal to determine the priority

to insert into the IPv6 flow label header. This would allow a router with

class-based queuing to give priority to those packets which are perceptually

more important. This is the audio analogue to giving different QoS to MPEG P

and I frames [mpeg].

Applications other than audio and video have evolved

in Internet conferencing, conferencing, ranging from shared text editors (NTE

[han97]) to shared whiteboards (WB [floy95], DLB [gey98]) and support for

dynamic 3D objects (TeCo3D [mau99]). Such applications can be used to

substitute for meeting aids in physical conferences (whiteboards, projectors)

or replace visual and auditory cues that are lost in teleconferences (e.g.

raising hands [mates], voting [mates, mpoll] and a speaker list); they can also

enable new styles of joint work.

Non-A/V applications currently have vastly different

design philosophies. This leads to a multitude of architectures and proprietary

protocols both at the transport and at the application level. It is therefore a

challenging task to combine them into a homogeneous teleconferencing toolset.

Especially the development of generic services, like recording and playback of

conferencing sessions, is currently impossible. These problems can be traced to

two related areas: reliability and application layer protocols for non-A/V

applications.

Many non-A/V applications have in common that the

application protocol is about establishing and updating a shared state. Loss of

information is often not acceptable, so some form of multicast reliability is

required. However, the applications' requirements differ. Some applications

just need a guarantee that each application data unit (ADU) eventually arrives;

others require that the ordering of the ADUs transmitted by a single

participant will be received in order. Applications might even demand that the

total order of all ADUs send by all participants is preserved. Additionally

applications have different requirements on the timeliness with which the

packets need to be delivered.

Closely related to the reliability requirements of

an application is the problem of which part of the system is responsible for

providing the reliability. On the one hand there are approaches to provide

reliability at the transport level (layer 4). These approaches basically

provide an interface similar to TCP. The positive aspect of realising

reliability in this way is the simplicity of the interface and a very clean

(layered) software architecture.

On the other hand approaches exist which require the

application to be network aware and

help with repairing packet loss. This is especially desirable if the repair

mechanism does not rely exclusively on the simple retransmission of the lost

packet(s) but also on application level information. In a shared whiteboard,

for example, it might be desirable to repair packet loss, which relates to the

state of the pages visible to the local user on a higher priority than packet

loss for other pages. An additional benefit of being network aware is the possibility of mapping ADUs to transport PDUs

on a one-to-one basis (application level framing, ALF [clark90]). If each transport

PDU carries information which is useful to the application independent of any

other transport PDUs, the application can usually process them out of order,

significantly increasing the efficiency for some applications.

A possible solution to the problem of diverse

approaches to provide reliability in a multicast environment could be a

flexible framework for reliable multicast protocols. As proposed by the

Reliable multicast Framing Protocol Internet-Draft [crow98], a framing protocol

could be used to provide a similar service to different reliable multicast

approaches as RTP provides to different A/V encodings. Ideally this would

result in a flexible, common API for reliable multicast where each application

can choose from a set of services (such as one-to-many bulk transfer, or

many-to-many user interaction).

The question of how to realise the reliability for

different applications, given the wide range of reliability requirements, is

one of the topics where work is still in progress in the IRTF research group on

Reliable multicast [rmrg]. Other aspects of reliable multicast, which are not

well understood, include how to provide congestion control in a multicast

environment. As these issues are considered essential elements, standards track

protocols are not expected before these can be solved.

The second reason why non-A/V applications are so

diverse, and services like generic recording are currently not possible, is the

lack of a commonly accepted application level protocol (an RTP-like protocol framework

for non-A/V applications). While the need for such a protocol has

been expressed by many application developers, it is currently not addressed by

any standards track activities.

Most conferencing solutions, which allow for

collaboration between participants in a conference, provide either a single

application distributed amongst the participants (Application Sharing) or a distributed data set on which all may

work (Workspace Sharing).

Some conferencing tools allow additionally for

text-based communication exchange, file transmission, acquisition of

distributed information and other mechanisms to distribute or collect

(non-audiovisual) information in the context of a running conference.

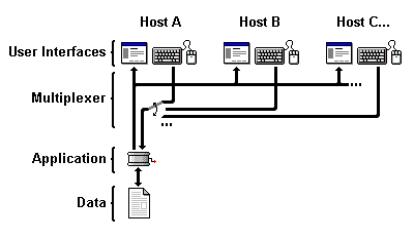

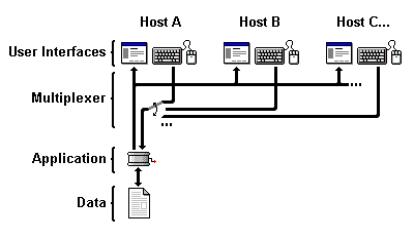

As shown in Fig. 6, Application Sharing basically works by distributing the user interface of a single application.

While that program is still running on a single machine, it may now be seen by

multiple conference participants. If permitted by the user actually running the

application, it may also be operated by several users - either simultaneously

or sequentially, depending on the chosen floor control policy.

Figure 6 Data

flow in an Application Sharing

scenario

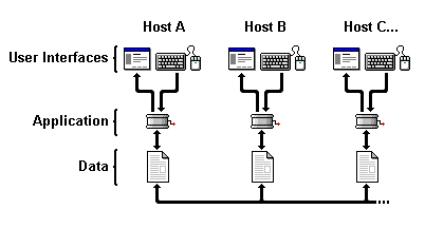

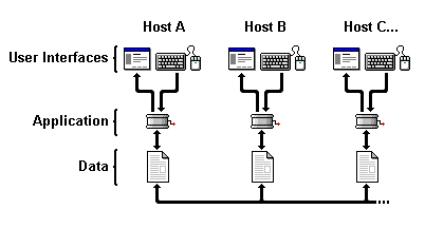

On the other hand, Workspace Sharing tools distribute a common data set among the

participants of a conference, as illustrated in Fig. 7. Each partner has a

local representation of the shared workspace and may modify it at will

(depending on the access control policy). By the exchange of messages, the

sites try to achieve a consistent workspace state.

Figure 7 Data

flow in a Workspace Sharing scenario

The shared

workspaces described within this chapter allow several participants to

modify simultaneously one or multiple

documents in the context of a running

conference (synchronous collaboration). There are other (asynchronous)

systems [bscw][linkworks] defining a workspace

as a set of documents (in terms of

files created by an arbitrary non-distributed application) which can be consecutively

modified by the members of a working group. In contrast to a mere distributed

file system, these tools implement versioning and access control, support

routing and workflow mechanisms, provide electronic signatures for individual

documents, allow for approval and disapproval of modifications, keep track of

deadlines and the status of documents and inform group members of any document

changes they might be interested in.

Tools for Application

Sharing and Workspace Sharing

share a number of common issues.

5.2.1.1 (Adaptable) Reliability of Message Transfer

In contrast to real-time multimedia streams,

where lost packets just degrade the

perceivable quality of an audio/video transmission, application and

workspace-sharing techniques usually require a certain level of transport

reliability, at least. Otherwise, users would be faced with incomplete

documents or antiquated application states and actually be unable to

collaborate.

Only few applications (e.g. multicast file

transfer) need every packet be

delivered reliably (and in the original order), most systems want all

participants to share a common and actual

status (e.g. the current position of a shared mouse pointer) - packets carrying

obsolete status information (e.g., former pointer positions) may get lost

without adverse consequences.

Some tools designed for Distributed Interactive Simulation assign priorities to certain status records (depending on their importance

for a successful collaboration) and require only high-priority information to be

delivered reliably. While a successful reception of low-priority data may

increase the quality, it is not vital for the simulation as a whole.

In the extreme case, some battlefield simulations

do without any mechanism to achieve a reliable transmission, since most aspects

of such a simulation can be calculated from physical models and only behavioural changes have to be transmitted. As these are

rare compared to the capacity of the networks and computers used, they can be

sent multiple times assuming that eventually every participant will have got

the information at least once.

5.2.1.2 Scalability

Most application and workspace-sharing tools

support a small number of users only - with numbers ranging from two to a few

tens - often limited by the transport protocol used. multicast applications

have the advantage, in principle, of supporting an unlimited number of

participants, as long as these sites only receive packets passively from other

active participants and do not try to send packets themselves. In practice, current

techniques for reliable multicast still limit the number of participants to a

few hundred.

5.2.1.3 Floor and Access Control

If multiple conference participants may operate a

shared application or modify objects in a shared workspace, there is a need to

coordinate these activities.

Some systems use the concept of an explicit floor holder to restrict activities to a

single user - with varying policies for the initial assignment of the floor and different (social) protocols

for passing it between session participants.

Multicast tools usually do without such a concept

as it does not mesh well with the idea of lightweight

multicast sessions. In a multicast environment it is often better to forbid

or permit the modification of certain objects - or not to implement any access

restrictions at all.

5.2.1.4 Consistency of State Information among Session Participants

Packet loss and different packet transit times

may lead to inconsistent states at individual sites causing problems if these

partners start operating a shared application or modifying a shared workspace

themselves based on their improper state information.

Floor-controlled systems often check (and establish)

workspace consistency when passing the floor to a new floor-holder - other

session partners may continue with an inconsistent state as they must not apply

any modifications.

Centralised approaches rely on one (or multiple)

central servers holding the actual state which all other session participants (clients) are bound upon. Clients may use

these servers to update their own status information or check for consistency

before requesting a status change themselves.

Multicast tools (without any access restrictions)

often use global clocks to identify the latest status of a given object and

impose a strict ordering on operations from several users. In combination with a message counter (and some heartbeat mechanism) to detect packet loss these clocks help

individual sites to converge to a common

(workspace) state.

5.2.1.5 Integration of late joining

Participants into a running Conference

Usually, not all conference partners participate

in a conference from the beginning - it often happens, that some users join a

session which is already running. For an application sharing session this

implies that the late user first has

to receive the actual contents of the shared screen in order to be able to interpret mouse movements and operations

properly. Similarly, in a shared workspace environment the late user has to obtain the actual workspace status before she/he

will be able to apply any modifications.

5.2.1.6 Behaviour in case of Network Partitioning

A special case occurs, when certain sites become

disconnected temporarily from the remaining group, e.g. because of a router

failure. In a floor-controlled system this might lead to the situation, that

the floor holder gets disconnected and, thus, prevents all other participants

from operating a shared application or modifying a shared workspace. Some tools

solve this problem by automatically or manually reassigning the floor to a new

participant (e.g. in an application-sharing session the floor is usually given

back to the person actually running the shared application).

5.2.1.7 Synchronisation with other Media Streams

When used in the context of an audio/video

conference, it is sometimes necessary to synchronise the application or

workspace-sharing session with A/V streams transmitted simultaneously to

prevent a speaker talking about a state not yet seen by his/her listeners. At

present, however, we know of only one system which provides such synchronisation,

the commercial product MarratechPro [marra].

5.2.1.8 Recordability

If an application or workspace-sharing tool

requires some kind of transport reliability, it is no longer sufficient for a

conference recorder just to store any incoming packet - instead, it has to understand the protocol, at least, in

order to detect packet loss and take the appropriate measures (such as to

request the retransmission of any lost packet).

Depending on the actual application, a conference

recorder might need even more intelligence

- e.g. for late joining a running

conference and acquiring enough information to successfully perform the

recording from then on.

Additional paradigm-specific issues are mentioned

in the next two sections.

As mentioned above, application sharing basically works by distributing the user

interface of a single application; any changes in the appearance of a running

application (e.g., a moving mouse pointer, new contents of a document window,

any dialogue windows or menu boxes that appear, or interface elements that

change their look when being used) are reported to all participants of a

sharing session. If the session further supports the remote operation of a

locally running program, any mouse movements and key presses performed by a remote

conference partner must be sent back and fed into the application as if they

had originated from the local machine. It depends on the chosen policy whether

all other partners may simultaneously operate a shared program or whether the

right to control it is restricted to one participant at a time. The former

simplifies sessions with frequently changing operators, the latter avoids any

confusion resulting from multiple users trying to operate an application

simultaneously.

The most important advantage of application sharing is its concept of

working with arbitrary group-unaware

applications. As a consequence, there is no need for the development of new

(group-aware) tools - the user may continue using his/her favourite legacy

application instead. That is, provided that the programmer has used officially

documented programming interfaces only. It is often the case, where this has

not been done, that it is not possible to share action games and other

real-time applications because they rely on special programming tricks.

Session set-up and control is provided by a

different program (a session manager)

which is independent of the shared application itself. Sometimes, this session

manager also comes with additional group-aware

tools such as a shared whiteboard, a text-based message exchange (chat) or a

file transfer feature.

Depending on how they distribute the visual

appearance of a user interface, application-sharing

environments can be classified into two main categories: view-sharing and primitive-sharing

environments.

A view-sharing

(sometimes called screen-sharing)

environment takes screen snapshots from the system running the shared

application and sends them as a bitmap to all other participants. Such a

technique is simple to implement and relatively easy to keep

platform-independent. An important implementation of this concept is the

Virtual Network Computing (VNC) [vnc], developed by AT&T Laboratories

Cambridge (see below).

However, most implementations follow the primitive-sharing paradigm and

distribute graphics primitives which then have to be rendered at each site

individually. Due to its similarity to the way in which the X Window system

works, so called X multiplexers were

the first systems which implemented this approach. Today, numerous such systems

exist (XTV [aw99], Hewlett-Packard's SharedX [garf], Sun's SharedApp [sunf] to

mention just a few examples) for the UNIX platform and for IBM-compatible PCs

running Windows (Microsoft NetMeeting [netm] and other T.120-based tools, see

below), but most of them are platform-dependent as the graphics primitives used

for distributing an user interface usually resemble corresponding library calls

and their parameters on a given platform. An important exception from this rule

is the JVTOS system [froitz] which was developed during the RACE project CIO to

provide cross-platform application sharing between Sun and SGI workstations,

Apple Macintosh computers [wolf] and IBM-compatible PCs running Windows.

5.2.2.1 Issues

This section addresses a few problems of application-sharing environments in

addition to those mentioned in Section 5.2.1. See also [begole].

Screen resolution and

colour model

Since application

sharing works by distributing the graphical user interface of an

application, each partner's screen resolution and colour depth should be

compatible with that of the system running the shared application. Otherwise,

parts of a program's window might fall outside the available screen area or

colours may look considerably different at certain sites.

Centralised design

Application sharing sometimes suffers from its

client-server model: a single (server) site running the actual program

distributes its user interface to one or multiple client sites which may for

their part operate the shared application - if permitted. Any files needed

while running the application have to be stored on the server first - and any

results from the run have to be distributed to the participants again (which is

usually done outside the sharing session). If the central server gets

disconnected, other partners are unable to continue with their work and have to

wait for the server to become available again. Similarly, working with an

application is limited to the life-time of a conference session for remote

partners - unless they get all the files and install the application locally as

well, there is no possibility to continue with their work as soon as the

session has finished.

What-you-see-is-what-I-see

(WYSIWIS)

Both an advantage as well as a disadvantage of application sharing is the what-you-see-is-what-I-see effect: every

conference participant has the same view of a shared application, there is no

possibility of browsing through a document or

experiment with other settings without requiring all other partners to follow

these activities. While such a behaviour is explicitly desired during tutorial lectures, it may become

cumbersome in distributed workgroup

sessions.

No concurrent work

An important side-effect of WYSIWIS behaviour is

the restriction to a single position in a document. There is no possibility for

the members of a group to work on different parts of a document simultaneously

by means of application sharing.

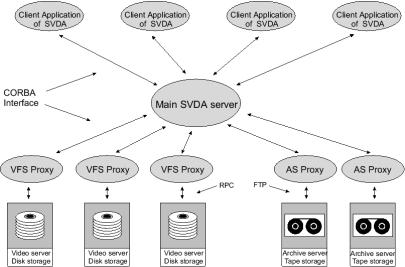

Despite these problems, application sharing is